Cluster Operation

Allegra can be operated in a clustered environment, i.e. one Allegra instance can run on multiple servers. This section gives examples of possible configurations and explains how Allegra behaves in a clustered environment.

Cluster configurations

There are two types of clusters for web applications: High Availability (HA) clusters and load balancing clusters.

With a high-availability cluster, you can improve the availability of the Allegra service, for example to ensure 24/7 operation. An HA cluster operates with redundant nodes that take over service delivery if a system component fails. The most common size for an HA cluster is two nodes, which is the minimum required for redundancy. Through this redundancy, HA cluster implementations eliminate single points of failure.

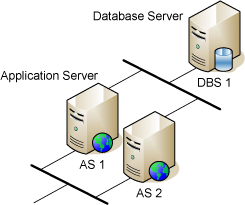

The figure above shows a simple HA cluster with two application servers and one database server. The database server is not redundant, but it would be possible to use one of the application servers as a redundant database server. The router that connects application server 1 and application server 2 is not shown.

The purpose of a load-balancing cluster is to distribute a workload evenly across multiple backend nodes. Typically, the cluster will have multiple redundant load-balancing front ends. Since each node in a load-balancing cluster must provide full service, it can be thought of as an active/active HA cluster in which all available servers process requests.

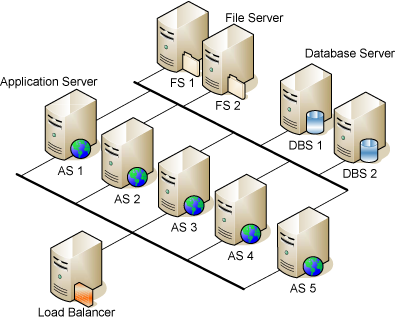

The figure above shows a fairly large configuration with a load balancer, five application servers, two database servers and two file servers.

All application servers operate on a single database that is redundantly served by two database servers. The attachments and full-text search indexes are kept on a single file server, which also has a backup. The backup of the file server is left to the operating system as Allegra does not provide support for it.

The database management system must take care of synchronization between the original database server and the backup database server.

All communication between the various Allegra application servers in a cluster is handled by the database. There is no need to create special ports for communication between the application servers or to use patched Java virtual machines.

Note

Allegra does not require any configuration to run in a clustered environment. When you connect multiple Allegra instances to the same database, Allegra automatically enters cluster mode. However, you need an appropriate license key that includes all application servers that you connect to that database.

Many other configurations are possible. For example, one of the application servers can also be the file server. Or the database server and the file server can be installed on the same hardware. Allegra itself doesn’t care about any of this, as long as you make sure you have exactly one access point (JDBC URL) for the database and exactly one file path to the attachments and indexes directories for all Allegra instances of this cluster.
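The "exactly one access point" rule can be checked mechanically before nodes are added to a cluster. The following is a minimal sketch, not part of Allegra: the class `InstanceSettings`, its field names, and the example URL and paths are hypothetical stand-ins for whatever each instance is actually configured with.

```java
import java.util.List;

/** Hypothetical per-instance settings; the field names are illustrative, not Allegra configuration keys. */
final class InstanceSettings {
    final String jdbcUrl;
    final String attachmentDir;
    final String indexDir;

    InstanceSettings(String jdbcUrl, String attachmentDir, String indexDir) {
        this.jdbcUrl = jdbcUrl;
        this.attachmentDir = attachmentDir;
        this.indexDir = indexDir;
    }
}

final class SharedResourceCheck {
    /** Returns true when every instance uses the same JDBC URL and the same attachment and index directories. */
    static boolean sharesSingleAccessPoint(List<InstanceSettings> instances) {
        return instances.stream().map(s -> s.jdbcUrl).distinct().count() <= 1
            && instances.stream().map(s -> s.attachmentDir).distinct().count() <= 1
            && instances.stream().map(s -> s.indexDir).distinct().count() <= 1;
    }
}
```

The check compares strings only. It cannot tell whether two different paths resolve to the same network share, so it is a sanity check rather than proof of a correct setup.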

Startup Behavior

When an Allegra instance is started, it registers itself as a node in the database.

It then searches the database for other nodes. If there are nodes in the database that have not updated their entry within a certain timeout period (default is 5 minutes, can be set in the WEB-INF/quartz-jobs.xml file), this entry will be deleted from the table.

After that, Allegra will try to set itself as the master node, unless another node is already marked as the master node. If this is the only node, the attempt succeeds immediately. Otherwise, it may take up to one timeout period for a new master node to be fully established.
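The startup sequence described above (register, remove stale entries, try to claim the master role) can be illustrated with a small in-memory model. This is only a sketch under stated assumptions: the class `NodeTableSketch`, its methods and the heartbeat field are hypothetical, a map stands in for the database table, and the master role is claimed immediately when no master remains, whereas the real negotiation may take up to one timeout period.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

/** In-memory stand-in for the node table shared by all application servers (hypothetical). */
class NodeTableSketch {
    /** One row per node: time of the last entry update and a master flag. */
    static final class NodeRow {
        Instant lastHeartbeat;
        boolean master;
        NodeRow(Instant lastHeartbeat) { this.lastHeartbeat = lastHeartbeat; }
    }

    private final Map<String, NodeRow> rows = new LinkedHashMap<>();
    private final Duration timeout;

    NodeTableSketch(Duration timeout) { this.timeout = timeout; }

    /** Runs the three startup steps for a node; returns true if that node ends up as master. */
    boolean startNode(String nodeId, Instant now) {
        // 1. Register this node.
        rows.put(nodeId, new NodeRow(now));
        // 2. Delete entries that were not updated within the timeout period.
        rows.entrySet().removeIf(
            e -> Duration.between(e.getValue().lastHeartbeat, now).compareTo(timeout) > 0);
        // 3. Claim the master role only if no remaining node holds it.
        boolean masterExists = rows.values().stream().anyMatch(r -> r.master);
        if (!masterExists) {
            rows.get(nodeId).master = true;
        }
        return rows.get(nodeId).master;
    }

    /** Refreshes a node's entry so that it is not treated as stale. */
    void heartbeat(String nodeId, Instant now) {
        NodeRow row = rows.get(nodeId);
        if (row != null) {
            row.lastHeartbeat = now;
        }
    }

    /** Returns the id of the node currently flagged as master, if any. */
    Optional<String> currentMaster() {
        return rows.entrySet().stream()
            .filter(e -> e.getValue().master)
            .map(Map.Entry::getKey)
            .findFirst();
    }
}
```

With a 5-minute timeout, the first node started becomes master at once, and a second node started a minute later does not. Against a real database the three steps would have to run in a transaction so that two nodes starting at the same moment cannot both claim the role.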

The master node is responsible for the following:

Updating the full-text search index

Retrieving emails from an email server, if the submission of items by email is enabled

Otherwise, the master node behaves like a normal node.

Master node fails

If the master node fails, the full-text search index is temporarily not updated. However, no activities that require an update of the full-text search index are lost; they are stored in the database.

In addition, retrieval of emails from the email server is temporarily disabled. Again, no entries are lost as they are stored on the email server.

After a maximum of one timeout period (default value is 5 minutes, set in the WEB-INF/quartz-jobs.xml file) the negotiation between the remaining nodes as to who will replace the original master node starts. The result is random. The new master node starts updating the full-text search index and retrieving emails from the email server.

Thus, the failure of a master node leads to slightly reduced performance and, with the default timeout, a delay of about 5 minutes in updating the full-text search index.
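The failover described in this section can be pictured as follows: once the failed master's entry is older than the timeout, one of the nodes that is still updating its entry is chosen at random. The sketch below is illustrative only. The class `FailoverSketch`, the method `pickReplacement` and the heartbeat map are assumptions, and the actual negotiation takes place through the database rather than in a single method call.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.Random;

final class FailoverSketch {
    /**
     * Picks a random replacement master among the nodes whose last entry update
     * is no older than the timeout. Returns empty if no live node remains.
     */
    static Optional<String> pickReplacement(Map<String, Instant> lastHeartbeat,
                                            Instant now,
                                            Duration timeout,
                                            Random random) {
        List<String> live = new ArrayList<>();
        for (Map.Entry<String, Instant> e : lastHeartbeat.entrySet()) {
            // A node counts as live if its entry was updated within the timeout period.
            if (Duration.between(e.getValue(), now).compareTo(timeout) <= 0) {
                live.add(e.getKey());
            }
        }
        if (live.isEmpty()) {
            return Optional.empty();
        }
        return Optional.of(live.get(random.nextInt(live.size())));
    }
}
```

Passing the `Random` instance in makes the otherwise random outcome reproducible in tests; a failed master whose entry is, say, 400 seconds old is never selected when the timeout is 5 minutes.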

Failure of a regular node

When a regular node fails, there is some performance degradation.

The other nodes take less than the timeout period (default is 5 minutes, set in the WEB-INF/quartz-jobs.xml file) to detect that a node has failed.

However, this has no further consequences.

Forced change of master node

Normally, all application nodes negotiate among themselves as to who should become the master node. The result is random.

However, it is possible to force one of the nodes to become the master node. To do this, the current master node must be instructed to finish its master-node tasks, such as updating the full-text search index, and to make room for the new master node. This process may take up to one timeout period (5 minutes by default) before the new node can assume its responsibilities as the master node.